The Best Graphics Cards for Artificial Intelligence in 2024

- 1. NVIDIA A100 Tensor Core

- 2. NVIDIA H100 Tensor Core

- 3. AMD Instinct MI300

- 4. NVIDIA RTX 6000 Ada Generation

- 5. Google TPU v5e

- 6. NVIDIA A40

- 7. AMD Radeon PRO W7800

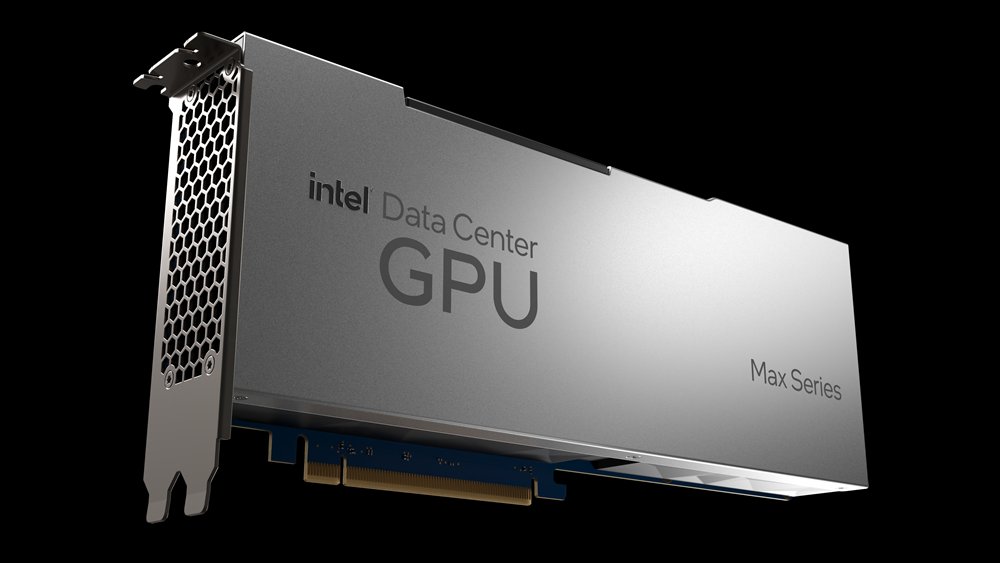

- 8. Intel Data Center GPU Max 1550

- Conclusion

Artificial intelligence (AI) continues to be one of the fastest-growing fields in technology. Training AI models efficiently requires specialized hardware that can handle the massive computations involved. Graphics cards (GPUs) play a key role here because they are designed to process large volumes of data in parallel, which is crucial for tasks such as deep neural network training.
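To give a rough sense of the scale of these computations, here is a minimal Python sketch that counts the floating-point operations in a single matrix multiplication and divides by a card's peak rate. The layer size is a hypothetical example, and the 19.5 TFLOPS figure is the A100 FP32 number listed later in this article. Real workloads rarely sustain peak throughput, so treat the result as a lower bound rather than a prediction.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for multiplying an (m x k) matrix by a (k x n) matrix.

    Each of the m*n outputs takes k multiplies and (by the usual
    convention) k additions, so the total is 2*m*k*n.
    """
    return 2 * m * k * n


def ideal_seconds(flops: int, peak_tflops: float) -> float:
    """Lower bound on runtime if the device sustained its peak rate."""
    return flops / (peak_tflops * 1e12)


# Hypothetical layer: a batch of 4096 vectors through an 8192 x 8192 weight matrix.
flops = matmul_flops(4096, 8192, 8192)
print(f"{flops / 1e12:.2f} TFLOP for one forward pass of this layer")
print(f"{ideal_seconds(flops, 19.5) * 1e3:.1f} ms at a 19.5 TFLOPS peak")
```

A full training run repeats operations like this across many layers and millions of steps, which is why peak throughput and parallelism dominate the comparisons below.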

1. NVIDIA A100 Tensor Core

Key Specifications:

- Architecture: Ampere

- Memory: 40 GB HBM2

- Tensor Cores: 432

- FP32 Performance: 19.5 TFLOPS

- Power Consumption: 400W

The NVIDIA A100 Tensor Core remains a top choice for AI workloads in 2024, especially in data center environments. This GPU is specifically designed for AI and high-performance computing (HPC), focusing on deep learning and big data analytics. With its 40 GB of HBM2 memory and 432 Tensor Cores, the A100 delivers outstanding performance for both large-scale machine learning training and inference.
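Whether a model fits in a card's memory can be estimated with simple arithmetic: parameter count times bytes per parameter. The sketch below is an illustration only. The function names and the 80% headroom factor (space left for activations and buffers) are assumptions, and it counts weights alone. Training also needs room for gradients and optimizer state, which this does not model.

```python
# Bytes used to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gb(n_params: float, dtype: str) -> float:
    """Memory needed for the model weights alone, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(n_params: float, dtype: str, memory_gb: float, headroom: float = 0.8) -> bool:
    """True if the weights fit within `headroom` of the card's memory.

    The 0.8 default is an assumed safety margin, not a vendor figure.
    """
    return weights_gb(n_params, dtype) <= memory_gb * headroom


# Hypothetical model sizes checked against the A100's 40 GB.
for n_params, dtype in [(7e9, "fp16"), (13e9, "fp16"), (13e9, "fp32")]:
    verdict = "fits" if fits(n_params, dtype, 40) else "does not fit"
    print(f"{n_params / 1e9:.0f}B params in {dtype}: "
          f"{weights_gb(n_params, dtype):.0f} GB, {verdict} in 40 GB")
```

The same check applies to any card in this list by changing the `memory_gb` argument.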

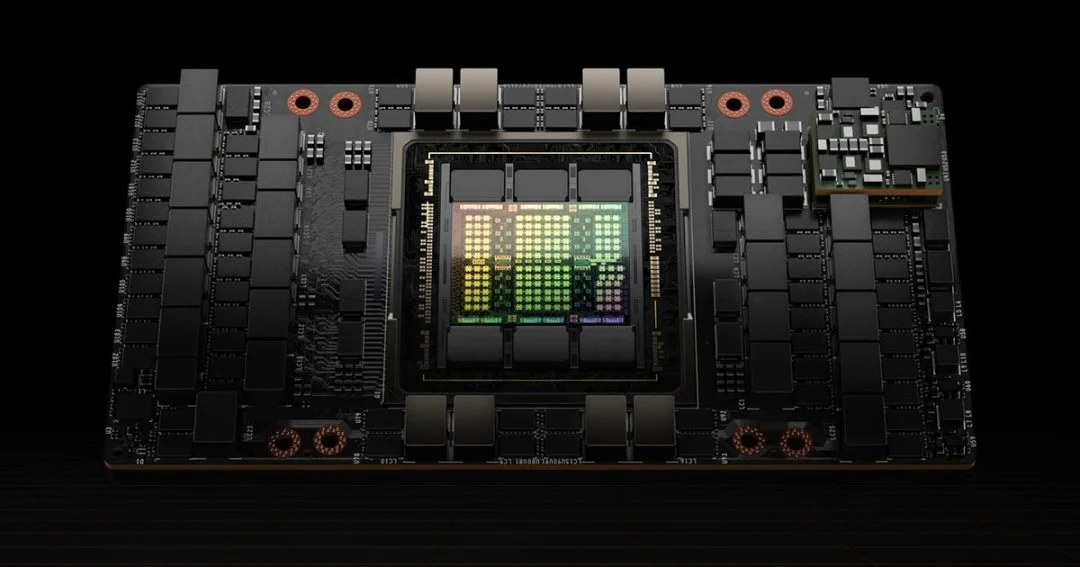

2. NVIDIA H100 Tensor Core

Key Specifications:

- Architecture: Hopper

- Memory: 80 GB HBM3

- Tensor Cores: 528 (SXM version)

- FP32 Performance: 67 TFLOPS

- Power Consumption: 700W

The NVIDIA H100, based on the Hopper architecture, is one of the most advanced GPUs for AI tasks in 2024. With FP32 performance of up to 67 TFLOPS and 528 Tensor Cores in its SXM version, the H100 offers strong capabilities for training deep neural networks and running inference at high speed. Its 80 GB of HBM3 memory holds large models and batches on a single card, making it an ideal choice for researchers and enterprises requiring cutting-edge performance.

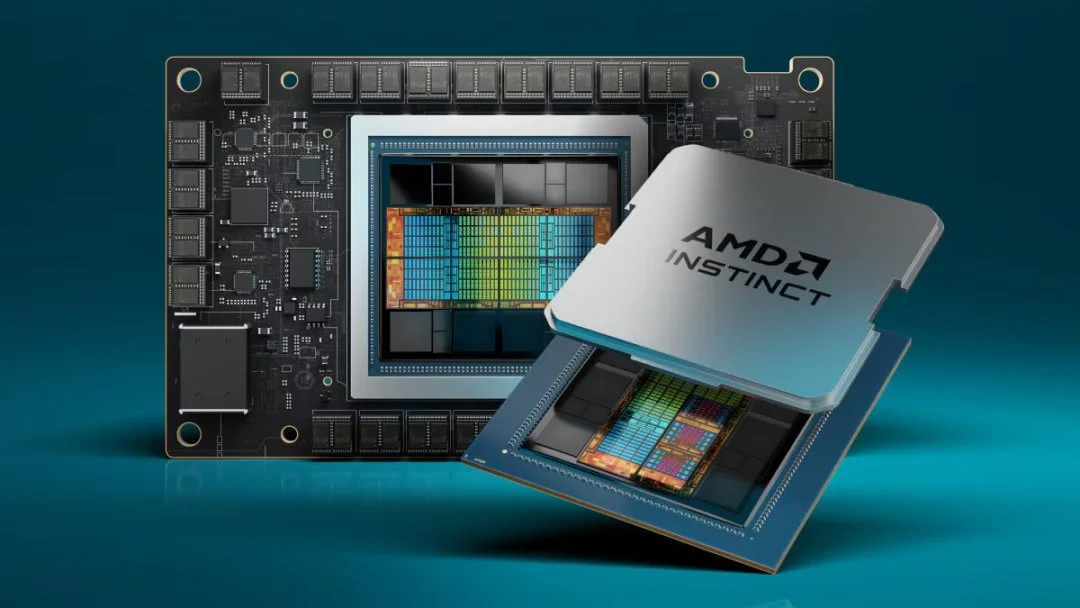

3. AMD Instinct MI300

Key Specifications:

- Architecture: CDNA 3

- Memory: 128 GB HBM3 (MI300A variant; the MI300X has 192 GB)

- FP32 Performance: 122.6 TFLOPS peak (MI300A)

- Power Consumption: 550W (MI300A default TDP)

AMD has made a significant leap in the AI space with its Instinct MI300. This GPU is designed to compete directly with NVIDIA’s H100 series. With 128 GB of HBM3 memory and exceptional performance in mixed-precision computing, the Instinct MI300 is among the most powerful cards available in 2024. Its ability to handle large AI models and massive workloads makes it ideal for industrial-scale training applications.

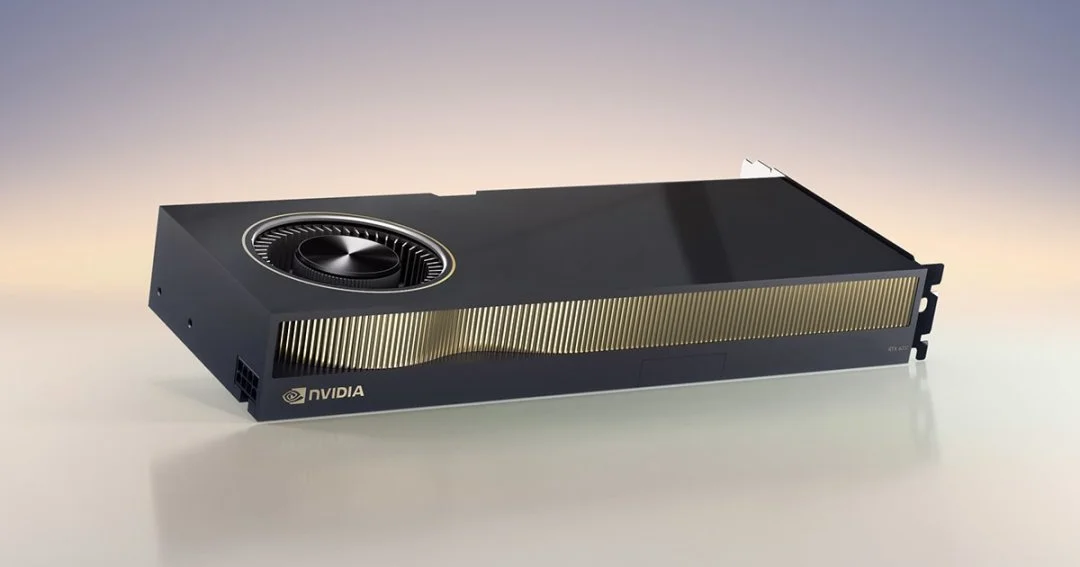

4. NVIDIA RTX 6000 Ada Generation

Key Specifications:

- Architecture: Ada Lovelace

- Memory: 48 GB GDDR6

- FP32 Performance: 91.1 TFLOPS

- Power Consumption: 300W

Although the RTX series is more commonly associated with gaming and graphics, the NVIDIA RTX 6000 Ada Generation has become a strong option for AI professionals seeking a high-performance GPU that is more affordable than data center alternatives. With its Ada Lovelace architecture and 48 GB of GDDR6 memory, this graphics card offers formidable performance for AI projects, especially in research and development.

5. Google TPU v5e

Key Specifications:

- Architecture: Tensor Processing Unit (TPU)

- Memory: 16 GB HBM per chip

- Performance: Up to 197 TFLOPS (BF16) or 394 TOPS (INT8) per chip; about 100 petaOps (INT8) across a full 256-chip pod

- Power Consumption: 400W

Although technically not a GPU, the Google TPU v5e is an accelerator specifically designed for AI tasks. This unit is extremely efficient at executing inferences on trained models, and its integration into Google Cloud makes it an attractive option for businesses seeking scalable solutions without investing in physical infrastructure. Its custom design and superior performance make it one of the best options for high-volume inference workloads in 2024.

6. NVIDIA A40

Key Specifications:

- Architecture: Ampere

- Memory: 48 GB GDDR6

- FP32 Performance: 37.4 TFLOPS

- Power Consumption: 300W

The NVIDIA A40 is another graphics card targeted at professionals seeking robust performance for AI tasks and advanced visualization. With an Ampere architecture and 48 GB of GDDR6 memory, the A40 is well-suited for AI training applications as well as complex graphics rendering. Its versatility makes it an excellent choice for businesses working on mixed projects involving both AI and visualization.

7. AMD Radeon PRO W7800

Key Specifications:

- Architecture: RDNA 3

- Memory: 32 GB GDDR6

- FP32 Performance: 45.24 TFLOPS

- Power Consumption: 260W

The AMD Radeon PRO W7800 is primarily designed for graphics professionals, but it is also an excellent choice for those needing an affordable yet powerful GPU for AI projects. With 32 GB of GDDR6 memory and FP32 performance of 45.24 TFLOPS, the W7800 offers competitive performance for AI tasks that do not require the highest level of processing but still demand an efficient and versatile GPU.
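One way to put a number on "efficient" is peak FP32 throughput per watt. The sketch below is a rough illustration using the FP32 and power figures listed in this article for three of the cards. The `Card` class is a hypothetical helper. Peak FP32 is only a proxy: data center parts such as the A100 do most AI work on Tensor Cores at lower precision, so this ranking understates them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    fp32_tflops: float  # peak FP32 throughput as listed in this article
    watts: int          # power consumption as listed in this article

    @property
    def gflops_per_watt(self) -> float:
        """Peak FP32 GFLOPS delivered per watt of rated power."""
        return self.fp32_tflops * 1000 / self.watts


CARDS = [
    Card("NVIDIA A100", 19.5, 400),
    Card("NVIDIA RTX 6000 Ada Generation", 91.1, 300),
    Card("AMD Radeon PRO W7800", 45.24, 260),
]

# Rank from most to least efficient by this (simplified) metric.
ranked = sorted(CARDS, key=lambda c: c.gflops_per_watt, reverse=True)
for card in ranked:
    print(f"{card.name}: {card.gflops_per_watt:.0f} GFLOPS/W")
```

By this measure the workstation cards come out ahead of the A100, which reflects their newer architectures and FP32-heavy designs rather than better AI training performance.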

8. Intel Data Center GPU Max 1550

Key Specifications:

- Architecture: Xe-HPC

- Memory: 128 GB HBM2e

- FP32 Performance: 52 TFLOPS

- Power Consumption: 600W

Intel continues to expand into the AI market with its data center GPU series. The Intel Data Center GPU Max 1550 is designed to accelerate massive workloads in data center environments. With 128 GB of HBM2e memory and peak FP32 performance of 52 TFLOPS, this GPU targets large-scale AI model training and data processing.

Conclusion

Choosing the right graphics card for AI in 2024 depends on the type of tasks you need to perform. High-end options like the NVIDIA H100 and AMD Instinct MI300 are the strongest choices for large-scale model training. Meanwhile, the Google TPU v5e is an exceptional choice for inference and cloud-based AI processing. If you're on a budget, the NVIDIA RTX 6000 Ada Generation and the AMD Radeon PRO W7800 are capable alternatives.

Selecting the right GPU shortens training and inference times and determines how large a model you can work with. 🚀

Jorge García

Fullstack developer